Control theory has produced, since the 1950s, a wealth of feedback designs with rigorous guarantees of stability, performance, robustness, and optimality. Some of these feedback laws are very complex and require intensive online numerical computation.

Neural operators, a branch of machine learning with sophisticated tools and theory for approximating infinite-dimensional nonlinear mappings, offer a way to speed up online implementation by a factor on the order of 1,000. They replace numerical computations with function evaluations of NN approximations of the operators.
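To make "numerical computation" concrete, here is a minimal sketch. It assumes the benchmark hyperbolic PDE `u_t = u_x + β(x) u(0,t)` on [0, 1) with boundary control `u(1,t) = U(t)`. For this plant, the backstepping gain kernel solves the Volterra equation `k(x) = -β(x) + ∫_0^x β(x - s) k(s) ds`, and the feedback is `U(t) = ∫_0^1 k(1 - y) u(y,t) dy`. The function names, the trapezoidal discretization, and the sample β and state are hypothetical choices made for illustration. The map from β to k is the kind of operator a neural operator would learn, while evaluating U is the cheap online step.

```python
import numpy as np


def kernel_from_beta(beta: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Solve k(x) = -beta(x) + int_0^x beta(x - s) k(s) ds on a uniform grid x.

    beta[i] holds beta(x[i]). Trapezoidal rule, marching from x = 0 outward;
    the implicit endpoint term is moved to the left-hand side.
    """
    n = len(x)
    h = x[1] - x[0]
    k = np.zeros(n)
    k[0] = -beta[0]
    for i in range(1, n):
        # Interior nodes s_j, j = 1..i-1, pair beta(x_i - s_j) = beta[i - j] with k[j].
        interior = np.dot(beta[i - 1:0:-1], k[1:i])
        # Endpoint s_0 = 0 has trapezoid weight h/2.
        known = h * (0.5 * beta[i] * k[0] + interior)
        # Endpoint s_i = x_i contributes (h/2) * beta[0] * k[i]; solve for k[i].
        k[i] = (-beta[i] + known) / (1.0 - 0.5 * h * beta[0])
    return k


def control_input(k: np.ndarray, u: np.ndarray, x: np.ndarray) -> float:
    """Trapezoidal approximation of U = int_0^1 k(1 - y) u(y) dy."""
    h = x[1] - x[0]
    f = k[::-1] * u  # on a uniform grid over [0, 1], k(1 - y_j) = k[n - 1 - j]
    return float(h * (f.sum() - 0.5 * (f[0] + f[-1])))


x = np.linspace(0.0, 1.0, 501)
beta = 3.0 + np.sin(2.0 * np.pi * x)  # hypothetical plant coefficient
k = kernel_from_beta(beta, x)         # expensive step: the operator to be learned
u = np.sin(np.pi * x)                 # hypothetical snapshot of the PDE state
U = control_input(k, u, x)            # cheap step: evaluated online
print(f"U = {U:.4f}")
```

For constant β ≡ b the kernel has the closed form `k(x) = -b e^{bx}`, which makes a convenient accuracy check for the solver.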

Around 2022, a line of research emerged in our group, with collaborators at UC San Diego (Yuanyuan Shi and Luke Bhan) and elsewhere, to facilitate the implementation of complex feedback laws using neural operators. While this research incorporates the usual machine learning steps (generating a training set by offline numerical computation and training a neural network), it is also intensely theoretical. It proves that the stability, performance, and robustness guarantees of classical control-theoretic designs are retained even under NN approximations.
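The offline pipeline described above (build a training set numerically, then fit an approximator) can be sketched for the benchmark kernel equation `k(x) = -β(x) + ∫_0^x β(x - s) k(s) ds` of the transport PDE `u_t = u_x + β(x) u(0,t)`. This is a minimal sketch under several assumptions. The family of β (random four-term cosine series), the grid, and the network are all hypothetical. The network is a deliberately simple stand-in for a neural-operator architecture such as DeepONet or FNO: a one-hidden-layer random-feature network, whose frozen tanh layer is followed by a linear output layer fitted by ridge regression.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 101
x = np.linspace(0.0, 1.0, n)
h = x[1] - x[0]


def kernel_from_beta(beta):
    """Trapezoidal solve of k(x) = -beta(x) + int_0^x beta(x - s) k(s) ds."""
    k = np.zeros(n)
    k[0] = -beta[0]
    for i in range(1, n):
        known = h * (0.5 * beta[i] * k[0] + np.dot(beta[i - 1:0:-1], k[1:i]))
        k[i] = (-beta[i] + known) / (1.0 - 0.5 * h * beta[0])
    return k


def sample_beta():
    """Hypothetical family of plant coefficients: random cosine series."""
    a = rng.uniform(-1.0, 1.0, size=4)
    return sum(a[m] * np.cos(m * np.pi * x) for m in range(4))


# Offline step 1: generate (beta, k) pairs with the numerical solver.
betas = np.stack([sample_beta() for _ in range(500)])
kernels = np.stack([kernel_from_beta(b) for b in betas])
b_train, b_test = betas[:400], betas[400:]
k_train, k_test = kernels[:400], kernels[400:]

# Offline step 2: random hidden layer (frozen), output layer fitted by ridge regression.
width, ridge = 512, 1e-3
W = rng.normal(scale=1.0 / np.sqrt(n), size=(n, width))
c = rng.uniform(-1.0, 1.0, size=width)


def features(b):
    return np.tanh(b @ W + c)


H = features(b_train)
A = np.linalg.solve(H.T @ H + ridge * np.eye(width), H.T @ k_train)

# Online: a function evaluation replaces the Volterra solve.
k_pred = features(b_test) @ A
rel_err = np.linalg.norm(k_pred - k_test) / np.linalg.norm(k_test)
print(f"held-out relative error: {rel_err:.2e}")
```

Swapping in a genuine neural operator would change only the fitting step. The data generation and the cheap online evaluation would stay the same.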

Arguably, some of the most complex feedback laws out there are for PDEs, nonlinear systems, and delay systems. Our focus is on developing neural operators for such systems.

The implementations are not limited to control laws; they also include state estimators (observers), adaptive control, and (nonlinear) gain scheduling.

The key components of this research are:

- Defining nonlinear operators that need to be approximated.

- Establishing the continuity (and even Lipschitzness) of these nonlinear infinite-dimensional mappings.

- Proving guarantees of Lyapunov stability, performance, and robustness under the NN approximations.

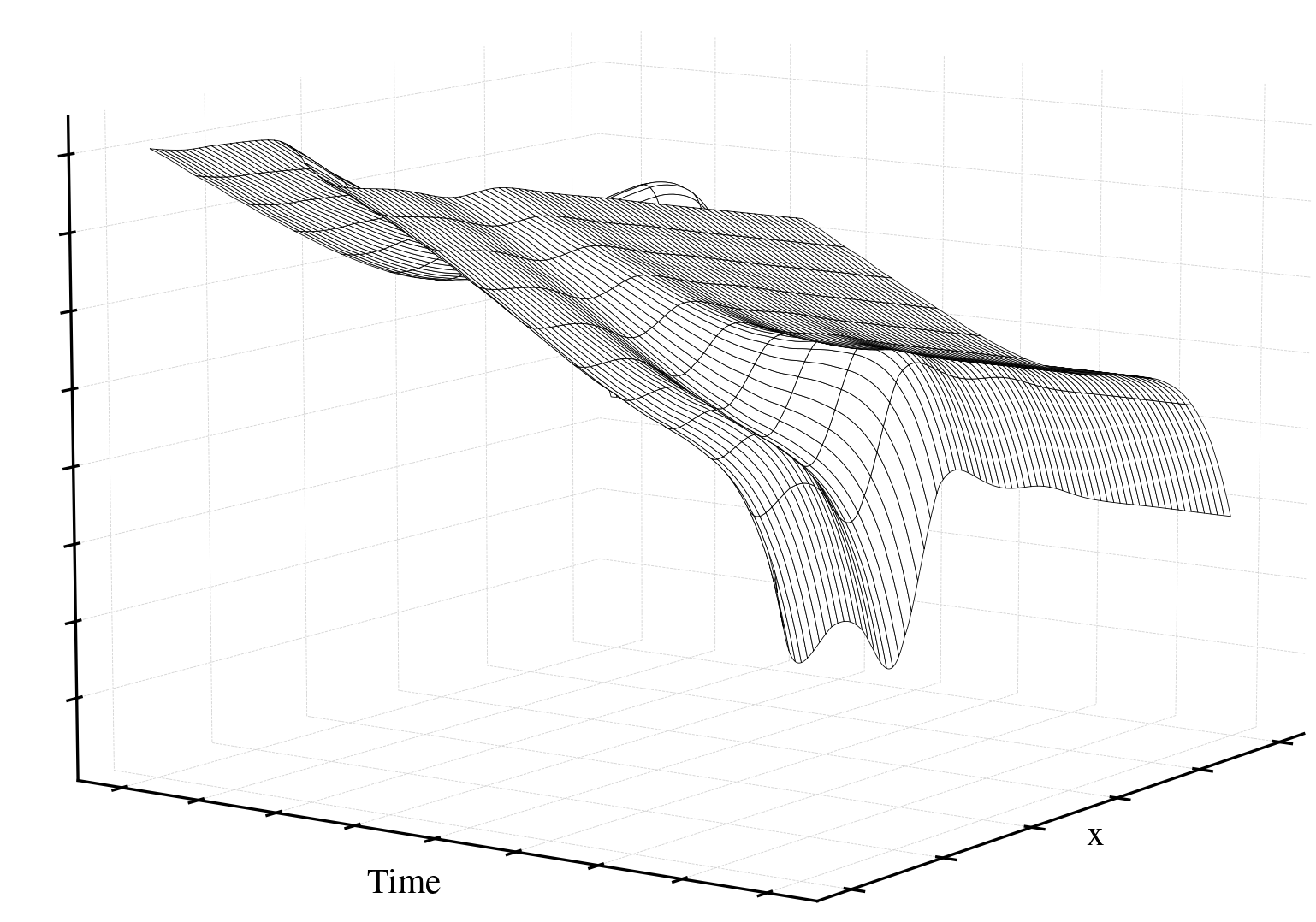

- Computational illustrations of training the neural operators and their performance in the feedback loop.
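The third and fourth components can be previewed with a toy closed-loop experiment. The sketch assumes the following:

- the benchmark transport PDE `u_t = u_x + β(x) u(0,t)`, `u(1,t) = U(t)` with constant β = 3, for which the uncontrolled system is unstable;
- the feedback `U(t) = ∫_0^1 k(1 - y) u(y,t) dy`, where k solves `k(x) = -β(x) + ∫_0^x β(x - s) k(s) ds`;
- a first-order upwind scheme whose time step equals the grid step.

The "perturbed" kernel is hypothetical: it is the computed kernel plus a small smooth error, not the output of a trained network. With the exact kernel, the continuous-time closed loop reaches zero by t = 1. The stability guarantees say that a sufficiently small kernel error still leaves the closed loop exponentially stable.

```python
import numpy as np

N = 200                               # number of grid cells
x = np.linspace(0.0, 1.0, N + 1)
h = x[1] - x[0]                       # time step is set equal to h (CFL number 1)
beta = np.full(N + 1, 3.0)            # constant beta = 3: the open loop is unstable
u_initial = np.sin(np.pi * x) + x     # hypothetical initial condition


def kernel_from_beta(beta):
    """Trapezoidal solve of k(x) = -beta(x) + int_0^x beta(x - s) k(s) ds."""
    k = np.zeros(N + 1)
    k[0] = -beta[0]
    for i in range(1, N + 1):
        known = h * (0.5 * beta[i] * k[0] + np.dot(beta[i - 1:0:-1], k[1:i]))
        k[i] = (-beta[i] + known) / (1.0 - 0.5 * h * beta[0])
    return k


def simulate(k, T=15.0):
    """Simulate u_t = u_x + beta(x) u(0,t) up to time T; k=None means U(t) = 0."""
    u = u_initial.copy()
    for _ in range(int(round(T / h))):
        new = np.empty_like(u)
        # With dt = h, transport toward x = 0 is an exact shift; the nonlocal
        # term beta(x) u(0,t) is integrated with explicit Euler.
        new[:-1] = u[1:] + h * beta[:-1] * u[0]
        if k is None:
            new[-1] = 0.0
        else:
            # U = int_0^1 k(1 - y) u(y) dy by the trapezoid rule. The y = 1 node
            # holds U itself, so its term is moved to the left-hand side.
            f = k[N:0:-1] * new[:-1]  # k(1 - y_j) * u(y_j) for j = 0..N-1
            partial = h * (0.5 * f[0] + f[1:].sum())
            new[-1] = partial / (1.0 - 0.5 * h * k[0])
        u = new
    return u


k_exact = kernel_from_beta(beta)
# Hypothetical stand-in for a neural-operator output: computed kernel + small error.
k_approx = k_exact + 0.1 * np.sin(2.0 * np.pi * x)

for label, gain in [("open loop", None), ("exact kernel", k_exact), ("perturbed kernel", k_approx)]:
    ratio = np.abs(simulate(gain)).max() / np.abs(u_initial).max()
    print(f"{label:>16}: max|u(T)| / max|u(0)| = {ratio:.2e}")
```

A large enough kernel error can destroy stability. In the extreme, a zero kernel is the unstable open loop. This is why stability guarantees under NN approximation require the approximation error to be sufficiently small.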

Of these four components, the second (establishing continuity of the nonlinear operators) is the most distinctive and the mathematically “juiciest.”
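The second component is a theorem, and a numerical experiment can only illustrate it. Consider the benchmark kernel equation `k(x) = -β(x) + ∫_0^x β(x - s) k(s) ds` of the transport PDE `u_t = u_x + β(x) u(0,t)`. For this scalar example, a short Gronwall argument (our own, not taken from the papers below) gives a Lipschitz bound on the set `sup|β| ≤ B`: `sup|k1 - k2| ≤ (1 + B e^B) e^B sup|β1 - β2|`. The sketch samples the ratio `sup|k1 - k2| / sup|β1 - β2|` over random pairs from a hypothetical cosine-series family with B = 2 and compares the largest ratio with that bound.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 201
x = np.linspace(0.0, 1.0, n)
h = x[1] - x[0]
B = 2.0  # assumed bound on sup|beta|


def kernel_from_beta(beta):
    """Trapezoidal solve of k(x) = -beta(x) + int_0^x beta(x - s) k(s) ds."""
    k = np.zeros(n)
    k[0] = -beta[0]
    for i in range(1, n):
        known = h * (0.5 * beta[i] * k[0] + np.dot(beta[i - 1:0:-1], k[1:i]))
        k[i] = (-beta[i] + known) / (1.0 - 0.5 * h * beta[0])
    return k


def sample_beta():
    """Random cosine series with sum |a_m| <= B, hence sup|beta| <= B."""
    a = rng.uniform(-B / 4, B / 4, size=4)
    return sum(a[m] * np.cos(m * np.pi * x) for m in range(4))


ratios = []
for _ in range(200):
    b1, b2 = sample_beta(), sample_beta()
    dk = np.abs(kernel_from_beta(b1) - kernel_from_beta(b2)).max()
    db = np.abs(b1 - b2).max()
    ratios.append(dk / db)

bound = (1.0 + B * np.exp(B)) * np.exp(B)
print(f"largest observed ratio: {max(ratios):.2f}, Gronwall bound: {bound:.1f}")
```

The observed ratios sit well below the bound. This is consistent with Lipschitz continuity, but it is not evidence of a sharp constant. The proofs in the papers below are what make the property usable for approximation guarantees.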

📌 Check this page occasionally for new developments in this evolving field.

| Topic | Journal papers (our group) | Conference papers (our group) | Papers by other groups |
|---|---|---|---|
| Hyperbolic | [J1, J2, J4, J5, J6, J8, J10, J11] | [C1, C2, C3, C4, C5, C6, C7, C8, C9] | [A1, A2, A5, A6, A7, A8, A9] |
| Parabolic | [J1, J3, J5, J9] | [C1, C6, C8] | [A3, A4] |
| Adaptive | [J1, J5, J7] | [C2] | [A1, A6] |
| Gain scheduling | [J1, J6] | | |
| Delays | [J1, J4, J9, J11] | [C8, C9] | [A7] |
| Observers | [J3] | [C1, C5] | |
| Traffic Applications | | [C1] | [A1, A8, A9] |
| Biological and Chemical Applications | [J3, J5, J9] | [C1] | [A3, A7] |
| Robotics Applications | | | [A10] |

- [J1] M. Krstic, “Machine learning: Bane or boon for control?,” IEEE Control Systems, vol. 44, pp. 24-37, 2024.

- [J2] L. Bhan, Y. Shi, and M. Krstic, “Neural operators for bypassing gain and control computations in PDE backstepping,” IEEE Transactions on Automatic Control, vol. 69, pp. 5310-5325, 2024.

- [J3] M. Krstic, L. Bhan, and Y. Shi, “Neural operators of backstepping controller and observer gain functions for reaction-diffusion PDEs,” Automatica, paper 111649, 2024.

- [J4] J. Qi, J. Zhang, and M. Krstic, “Neural operators for PDE backstepping control of first-order hyperbolic PIDE with recycle and delay,” Systems & Control Letters, paper 105714, 2024.

- [J5] L. Bhan, Y. Shi, and M. Krstic, “Adaptive control of reaction-diffusion PDEs via neural operator-approximated gain kernels,” Systems & Control Letters, vol. 195, paper 105968, 2025.

- [J6] M. Lamarque, L. Bhan, R. Vazquez, and M. Krstic, “Gain scheduling with a neural operator for a transport PDE with nonlinear recirculation,” IEEE Transactions on Automatic Control, to appear.

- [J7] M. Lamarque, L. Bhan, Y. Shi, and M. Krstic, “Adaptive neural-operator backstepping control of a benchmark hyperbolic PDE,” Automatica, vol. 177, paper 112329, 2025.

- [J8] S.-S. Wang, M. Diagne, and M. Krstic, “Backstepping neural operators for 2x2 hyperbolic PDEs,” Automatica, vol. 178, paper 112351, 2025.

- [J9] S.-S. Wang, M. Diagne, and M. Krstic, “Deep learning of delay-compensated backstepping for reaction-diffusion PDEs,” IEEE Transactions on Automatic Control, to appear.

- [J10] R. Vazquez and M. Krstic, “Gain-only neural operators for PDE backstepping,” Chinese Annals of Mathematics, Series B, invited article for the special issue dedicated to Jean-Michel Coron, under review.

- [J11] J. Qi, J. Hu, J. Zhang, and M. Krstic, “Neural operator feedback for a first-order PIDE with spatially-varying state delay,” IEEE Transactions on Automatic Control, under review.

- [C1] Y. Shi, Z. Li, H. Yu, D. Steeves, A. Anandkumar, and M. Krstic, “Machine Learning Accelerated PDE Backstepping Observers,” IEEE Conference on Decision and Control, 2022.

- [C2] L. Bhan, Y. Shi, and M. Krstic, “Operator Learning for Nonlinear Adaptive Control,” Learning for Dynamics and Control Conference, 2023.

- [C3] L. Bhan, Y. Shi, and M. Krstic, “Neural operators for hyperbolic PDE backstepping kernels,” IEEE Conference on Decision and Control, 2023.

- [C4] L. Bhan, Y. Shi, and M. Krstic, “Neural operators for hyperbolic PDE backstepping feedback laws,” IEEE Conference on Decision and Control, 2023.

- [C5] S.-S. Wang, M. Diagne, and M. Krstic, “Neural operator approximations of backstepping kernels for 2x2 hyperbolic PDEs,” American Control Conference, 2024.

- [C6] L. Bhan, Y. Shi, I. Karafyllis, M. Krstic, and J. B. Rawlings, “Moving-horizon estimators for hyperbolic and parabolic PDEs in 1-D,” American Control Conference, 2024.

- [C7] R. Vazquez and M. Krstic, “Gain-only neural operator approximators of PDE backstepping controllers,” European Control Conference, 2024.

- [C8] S.-S. Wang, M. Diagne, and M. Krstic, “Numerical implementation of deep neural PDE backstepping control of reaction-diffusion PDEs with delay,” Modeling, Estimation and Control Conference, 2024.

- [C9] L. Bhan, P. Qin, M. Krstic, and Y. Shi, “Neural Operators for predictor feedback control of nonlinear delay systems,” Learning for Dynamics and Control Conference, 2025.

- [A1] K. Lv, J. Wang, Y. Zhang, and H. Yu, “Neural Operators for Adaptive Control of Freeway Traffic,” arXiv preprint arXiv:2410.20708, 2024.

- [A2] Y. Zhang, J. Auriol, and H. Yu, “Operator Learning for Robust Stabilization of Linear Markov-Jumping Hyperbolic PDEs,” arXiv preprint arXiv:2412.09019, 2024.

- [A3] Y. Jiang and J. Wang, “Neural operators of backstepping controller gain kernels for an ODE cascaded with a reaction-diffusion equation,” 43rd Chinese Control Conference (CCC), 2024.

- [A4] K. Lv, J. Wang, and Y. Cao, “Neural Operator Approximations for Boundary Stabilization of Cascaded Parabolic PDEs,” International Journal of Adaptive Control and Signal Processing, 2024.

- [A5] Y. Xiao, Y. Yuan, B. Luo, and X. Xu, “Neural operators for robust output regulation of hyperbolic PDEs,” Neural Networks, vol. 179, 2024.

- [A6] X. Zhang, Y. Xiao, X. Xu, and B. Luo, “Intelligent Acceleration Adaptive Control of Linear 2x2 Hyperbolic PDE Systems,” arXiv preprint arXiv:2411.04461, 2024.

- [A7] J. Hu, J. Qi, and J. Zhang, “Neural Operator based Reinforcement Learning for Control of First-order PDEs with Spatially-Varying State Delay,” arXiv preprint arXiv:2501.18201, 2025.

- [A8] Y. Zhang, J. Auriol, and H. Yu, “Neural-Operator Control for Traffic Flow Models with Stochastic Demand,” 5th IFAC Workshop on Control of Systems Governed by Partial Differential Equations (CPDE 2025), 2025.

- [A9] Y. Zhang, R. Zhong, and H. Yu, “Mitigating stop-and-go traffic congestion with operator learning,” Transportation Research Part C: Emerging Technologies, vol. 170, 2025.

- [A10] S. Matada, L. Bhan, Y. Shi, and N. Atanasov, “Generalizable Motion Planning via Operator Learning,” International Conference on Learning Representations, 2025.